What Is A/B Testing? How It Works and When to Use It

Optimize product experience, marketing campaigns and the digital customer journey through dynamic A/B testing.

Originally published on January 24, 2022

The A/B testing method determines which of two versions of something produces better results. It’s often called “split testing” because users are split into two groups (the “A” group and the “B” group) and funneled into separate digital experiences.
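As a rough illustration of the split itself, the sketch below assigns each user to group “A” or “B” by hashing the user ID together with an experiment name. Hash-based bucketing is one common approach, not necessarily how any particular tool (including Amplitude Experiment) does it; `assign_variant` and the experiment name are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str) -> str:
    """Hypothetical helper: deterministically place a user in group "A" or "B".

    Hashing the experiment name with the user ID keeps each user in the same
    group for the whole experiment, while different experiments split users
    independently of one another.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Read the first 8 bytes of the SHA-256 digest as an unsigned integer.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return "A" if bucket % 2 == 0 else "B"

# Split 10,000 made-up user IDs for a made-up experiment.
users = [f"user-{i}" for i in range(10_000)]
groups = [assign_variant(u, "cta-color-test") for u in users]
print(groups.count("A"), groups.count("B"))  # each count should land near 5,000
```

Because the assignment depends only on its inputs, a returning user sees the same experience on every visit, which keeps the two groups’ behavior from blending together.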

An A/B test can help you craft better-performing marketing campaigns or tweak product onboarding workflows. Changes to your product or feature can be tested on small, segmented groups called cohorts to verify effectiveness while minimizing friction.

A/B testing is a reliable tool that applies to a wide variety of situations. Product managers, marketers, designers, and others who actively use A/B testing make data-backed decisions that drive real, quantifiable results.

Key takeaways

- An A/B test helps determine which of two different assets performs better.

- A/B tests are used to optimize marketing campaigns, improve UI/UX, and increase conversions.

- There are several types of A/B tests for testing individual pages, multiple variables, and entire workflows and funnels.

- A/B tests should be segmented, validated, and repeatable to produce reliable results.

What Are the Benefits of A/B Testing?

A/B tests answer a basic question: Do customers prefer Option 1 or Option 2? In the world of digital products, the answer to this question is valuable in a number of situations, including two major fields: improving the customer experience and enhancing marketing campaigns.

Improving UI/UX

Well-intentioned tweaks to your product’s UI can have unintentional consequences that create friction for users. You may change the location of a tab in your mobile app to appeal to new users but accidentally frustrate existing users accustomed to its former spot.

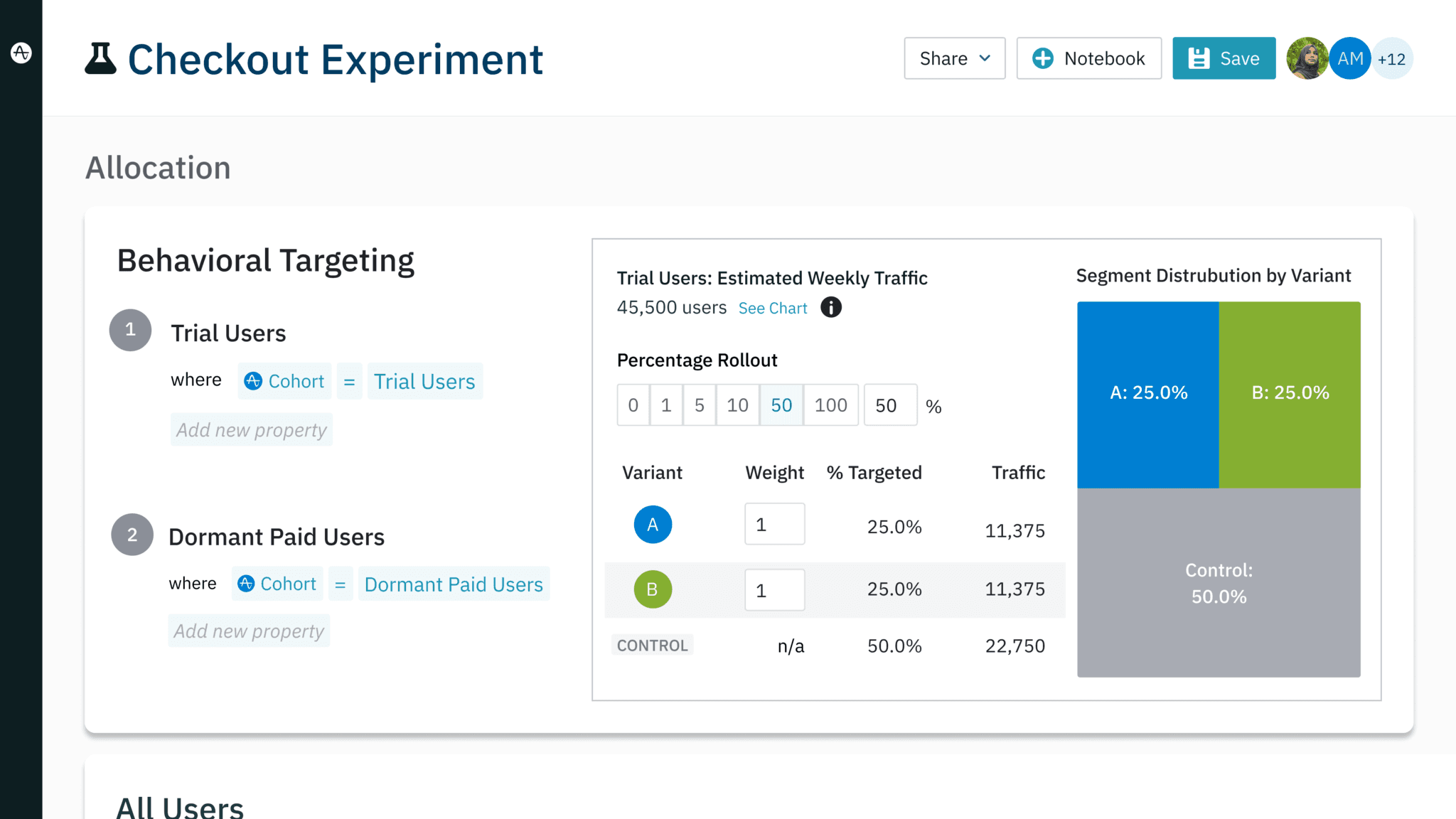

Performing a series of A/B tests minimizes the risk of making sweeping changes by first testing a smaller segment of your user base. For instance, you can create a small segment of new users in Amplitude Experiment and funnel them through the new iteration of your product with the tab relocated.

At the same time, you can create a similar group of new users and track them as they use your existing version. At the end of the experiment, compare the behavior of the experimental group with that of your control group to see which version of your product produces better results.
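One common way to compare the two groups, assuming the outcome is a yes/no conversion, is a two-proportion z-test. The sketch below uses only Python’s standard library; the conversion counts are invented for illustration, and a real analysis tool may use a different statistical method.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pool both groups to estimate the shared rate assumed by the null hypothesis.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # For a standard normal, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical results: control (A) vs. relocated tab (B), 5,000 new users each.
z, p = two_proportion_z_test(conv_a=400, n_a=5_000, conv_b=460, n_b=5_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 2.14, p = 0.032
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be random noise alone; it does not say how large or valuable the difference is.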

To get a sense of how existing customers will react to the alteration versus new users, you should repeat the experiment. This time, replace the experimental and control groups with segments of existing users. By running the experiment multiple times using different segments, you improve the chances that your changes fuel the adoption of your product instead of eliciting churn.

Optimizing Marketing Campaigns

A/B testing can also boost the performance of your marketing efforts. Your behavioral data will likely reveal that customers who convert share similar behaviors or demographics. You know whom you want to target, but the finer points prove elusive:

- What messaging proves most effective at fueling conversions?

- What offer or incentive do prospective or existing customers best respond to?

- Does a different design for a CTA button produce more clicks?

You could build a campaign based on experience and gut instinct and hope for the best, but this still carries risk. You might reach the right people with emails or paid social posts yet miss the mark with your message. The campaign may net many conversions, but you’ll never know whether the options you didn’t choose would have brought in more.

A/B tests identify your best marketing options with data-backed results. A series of A/B tests may reveal that users respond better to green CTAs than blue ones, or that a free trial offer outperforms a one-time discount. This process helps you avoid wasting spend on lower-performing ads.

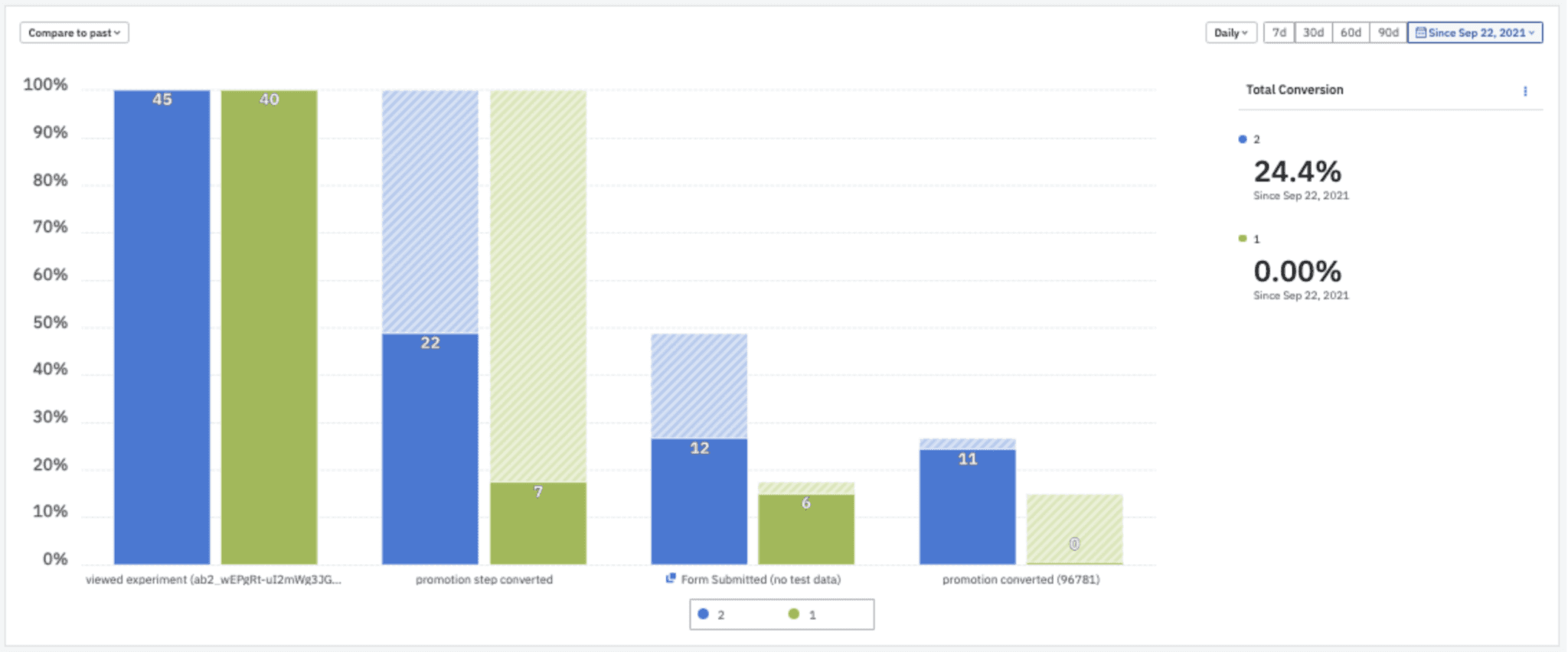

The A/B test view within the Funnel Analysis chart displays user movements through your funnel.

Real-World Examples of A/B Testing

Boosting Experiment Velocity

Lifull, one of the largest property portals in Japan, now runs experiments 1.5x faster with Amplitude Experiment, making its experimentation quicker and more relevant. Its product team can look for intricate patterns more often, which helps it untangle cause and effect. Lifull also reported a 2.8x increase in A/B test success rates and a 10x increase in the number of user leads.

Increasing Retention By Optimizing Customer Experience

NBCUniversal embraced A/B testing as a means of reducing churn. The media giant tested its existing homepage for Vizio TVs against newer iterations. With help from Amplitude, the company identified a new homepage that increased viewership in the experiment group by 10%. NBCUniversal then adopted the new homepage for all customers, a move that doubled 7-day retention.

Driving Innovation Through A/B Testing

The right analytics solution encourages more frequent experimentation, which in turn increases agility and fuels innovation. The team at GoFundMe used to need weeks to analyze A/B test results. With Amplitude’s ability to analyze results in real time, GoFundMe increased the number of tests it ran from two or three a month to 10. Instead of having to pick and choose which ideas to test due to time constraints, the team can now test hypotheses as they develop.

What Are the Types of A/B Testing?

Beyond conventional A/B testing, there are three other kinds of A/B tests you can use depending on the situation:

1. Split URL Testing

Tweaking a button on your homepage is one thing, but what happens if you want to test out an entirely new page design? Split URL testing takes the concept of A/B testing and expands it to a grander scale. This type of test creates an entirely separate URL so you can completely redesign a webpage from the ground up. Your experimental group can then be funneled to this new page so results can be compared against your existing one.

Split URL testing and traditional A/B testing can be used together to optimize page performance. A split URL test will reveal which of the two page designs performs better. From there, a series of traditional A/B tests can gauge user preference for finer details such as CTA copy, font size, or imagery.

2. Multivariate Testing

This type of experiment tests several variables at once. A traditional A/B test may assess which of two CTA button sizes is preferred. A multivariate test, by comparison, could combine different CTA sizes, headlines, and images, letting you determine which of many combinations performs best for your campaign.

Multivariate testing is helpful for confirming or refuting your assumptions about which of many assets will perform best. You may believe that a certain combination of design elements works best for your target audience. By creating versions with alternative variables, you can test your preferred design against many others for an honest account of what performs best.

The chief drawback of multivariate testing is that each additional variable multiplies the number of asset versions you need to create. If you want to test five different CTA button shapes, four CTA button colors, and three different fonts, you will need 5 × 4 × 3 = 60 (!) different test assets to cover all of your bases.
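The arithmetic behind that count is a Cartesian product: every shape is paired with every color and every font. The option names and traffic figure below are placeholders.

```python
from itertools import product

# Placeholder options for each variable in a hypothetical multivariate test.
shapes = ["rounded", "square", "pill", "circle", "outline"]  # 5 shapes
colors = ["green", "blue", "orange", "purple"]               # 4 colors
fonts = ["serif", "sans-serif", "monospace"]                 # 3 fonts

# Every combination of one shape, one color, and one font is a separate asset.
variants = list(product(shapes, colors, fonts))
print(len(variants))  # 60

# With an even split, each asset receives only a sliver of the test traffic.
daily_visitors = 12_000  # hypothetical
print(daily_visitors / len(variants))  # 200.0
```

Adding a fourth variable with just two options would double the total to 120 assets, which is why multivariate tests generally need far more traffic than a simple A/B test.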

3. Multipage Testing

A multipage test gauges the success of an alternative version of a workflow or funnel. Sweeping changes can be made to multiple pages in a sequence to build a separate funnel that is tested against the original. A multipage test is also appropriate when you simply want to add or remove one element from every page of a flow or funnel and test the effects.

Let’s say the checkout sequence for your ecommerce website looks like this:

Shopping Cart (A) → Payment Info (A) → Shipping Info (A) → Review Order (A) → Submit Order (A)

You are curious whether moving the “Next Step” button on the first three pages helps or hurts purchase rates. You could theoretically test each page individually, but customers don’t experience these pages in isolation. They move from one page to the next in sequence.

You know the results of your experiment will be more accurate if you test the pages in order. Because of this, you create variations of your purchase sequence so that your experimental funnel looks like this:

Shopping Cart (B) → Payment Info (B) → Shipping Info (B) → Review Order (A) → Submit Order (A)

As with any variation of A/B testing, the goal is to find the funnel that performs best, compared with both the original and other iterations. After testing a number of locations for your “Next Step” button, your final funnel may look more like this:

Shopping Cart (B) → Payment Info (D) → Shipping Info (C) → Review Order (A) → Submit Order (A)
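Comparing funnels comes down to counting how many users reach each step. The sketch below computes step-to-step and end-to-end conversion for the control funnel (all A pages) and an experimental funnel (first three pages B); the user counts and the `funnel_conversion` helper are made up for illustration.

```python
STEPS = ["Shopping Cart", "Payment Info", "Shipping Info", "Review Order", "Submit Order"]

def funnel_conversion(counts: list[int]) -> tuple[list[float], float]:
    """Return (step-to-step conversion rates, overall conversion) for one funnel.

    counts[i] is the number of users who reached STEPS[i].
    """
    step_rates = [after / before for before, after in zip(counts, counts[1:])]
    overall = counts[-1] / counts[0]
    return step_rates, overall

# Hypothetical users reaching each step.
control = [2_000, 1_400, 1_100, 950, 800]    # A → A → A → A → A
variant = [2_000, 1_500, 1_250, 1_080, 910]  # B → B → B → A → A

for name, counts in (("control", control), ("variant", variant)):
    step_rates, overall = funnel_conversion(counts)
    transitions = [f"{a} → {b}: {r:.0%}" for a, b, r in zip(STEPS, STEPS[1:], step_rates)]
    print(name, f"overall {overall:.1%}", transitions)
```

Looking at each transition shows where in the sequence a change helps or hurts, while the overall rate is what the whole-funnel comparison is ultimately judged on.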

Tips When Running an A/B Test

As beneficial as A/B tests are to UI/UX design and product marketing, they have to be performed and evaluated correctly to unlock their true potential. The keys to A/B testing success include:

Repeatability

Sometimes, A/B tests provide results that are so exciting that they lead to impulsive decisions. One business saw that simply changing the color of its CTA button boosted its conversion rates by a factor of three. If your own A/B test resulted in a similar outcome, you might want to immediately run this version of your ad, and it would be hard to blame you.

However, test results are considered valid only if they are repeatable. Getting the same result again and again reduces the chance that your initial result was a fluke. Likewise, if you launch a campaign with blue CTA buttons without ever testing a red version, you’ll never know whether you’re truly using the best CTA option.

User Segmentation

User segmentation is the process of selecting a specific subset of users for your A/B tests. These groups consist of customers who share similar behavioral or demographic traits. In many A/B tests, you want to know how a particular group of customers reacts to changes that will affect them. In these cases, testing a broad range of customers may actually dilute the signal from the segment you wish to target.

Imagine you’re considering aesthetic changes to your product’s chatbot. You know that customers who have used your product for more than three months are extremely unlikely to use the feature, so including them in an A/B test doesn’t make sense. Instead, create a segment of users who have used your product for less than three months to get the best sense of how your design changes affect the people who will actually use it.
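Assuming each user record carries a signup date, the segment described above is a simple filter. The `User` record, the `newer_users` helper, and the 90-day stand-in for “three months” are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class User:
    user_id: str
    signup_date: date

def newer_users(users: list[User], today: date, max_tenure_days: int = 90) -> list[User]:
    """Keep users whose tenure is strictly less than max_tenure_days.

    90 days is used here as a rough stand-in for "three months".
    """
    cutoff = today - timedelta(days=max_tenure_days)
    return [u for u in users if u.signup_date > cutoff]

# Hypothetical users, evaluated as of January 24, 2022.
users = [
    User("u1", date(2021, 12, 1)),   # 54 days of tenure: included
    User("u2", date(2021, 6, 1)),    # about 8 months: excluded
    User("u3", date(2021, 10, 26)),  # exactly 90 days: excluded
]
segment = newer_users(users, today=date(2022, 1, 24))
print([u.user_id for u in segment])  # ['u1']
```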

Amplitude can even build a cohort of customers most likely to perform a certain action in the future. This means you can build a segment made entirely of new users who have been analytically predicted to use the chatbot based on past behaviors. An A/B test using a predictive cohort has a much higher likelihood of including the users you want to target while excluding people who are not interested in using a chatbot.

Testing Your Test

If your A/B test is built incorrectly, all of your testing is for naught. A flawed test can produce outcomes that don’t make sense even with careful user segmentation and repeated testing. Customers do behave unpredictably from time to time, but there’s generally a pattern to their behavior.

Instead of funneling two groups into two separate experiences, test your product against itself in what is sometimes called an “A/A test”: split users into two groups as usual, but send both through the same, unchanged experience. Since there is no variation between the two journeys, the results should be nearly identical, apart from random noise. If the results come back skewed, it’s time to break your experiment down to its nuts and bolts and analyze each step to find where the issue is occurring.
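To get a feel for how similar A/A results should be, the simulation below runs many A/A tests in which both groups share the same true conversion rate (an assumed 10%). Random noise alone still makes roughly 5% of them look significant at a 0.05 threshold; a setup that flags far more than that suggests something in the experiment is broken. The helper names and all parameters are hypothetical, and the comparison uses a standard two-proportion z-test.

```python
import math
import random

def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))

def aa_false_positive_rate(runs: int, users_per_group: int, true_rate: float,
                           alpha: float = 0.05, seed: int = 7) -> float:
    """Simulate A/A tests and return the share that look 'significant'."""
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    flagged = 0
    for _ in range(runs):
        # Both groups see the identical experience, so they share one true rate.
        conv_a = sum(rng.random() < true_rate for _ in range(users_per_group))
        conv_b = sum(rng.random() < true_rate for _ in range(users_per_group))
        if z_test_p_value(conv_a, users_per_group, conv_b, users_per_group) < alpha:
            flagged += 1
    return flagged / runs

rate = aa_false_positive_rate(runs=500, users_per_group=1_000, true_rate=0.10)
print(f"{rate:.1%} of A/A tests looked significant")  # expect a value near 5%
```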

3 Common A/B Testing Mistakes (And How to Avoid Them)

A/B tests provide valuable insights as long as they are performed correctly. Mistakes in how a test is set up or evaluated can skew results and lead teams to make important decisions based on bad data. Some of the more common A/B testing mistakes include:

1. Testing Multiple Variables In a Single A/B Test

An A/B test is designed to test one variable at a time. Limiting the difference between A and B to a single change lets you attribute the outcome to that specific alteration with confidence.

Changing multiple variables opens test results up for interpretation. For instance, changing three aesthetic features might produce a better result for Ad A over Ad B, but there would be no way of knowing whether all three changes were responsible for the better performance. When tested individually, you might find that two of the changes actually hurt performance and the third change was solely responsible for the higher conversion rate.

Do yourself a favor and keep things simple: limit yourself to one variation per A/B test.

2. Testing Too Early

If you just set up a new landing page, it pays to hold off on A/B testing for a while. Altering elements on a new page right away prevents you from collecting necessary metrics on the original iteration’s performance. Without an established baseline, you don’t have the right data to test changes against.

3. Calling a Test Prematurely

You may be tempted to declare success after only a few days of promising results. However, it’s best to let tests run for at least a couple of weeks to get more reliable data. Something as simple as the day of the week or a holiday can skew results. Testing for several weeks provides a more realistic picture of how customers react to your changes over time.
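Rather than choosing a duration by feel, one common approach is to estimate up front how many users each group needs, then round the run time up to whole weeks so every day of the week is represented. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions; the baseline rate, target rate, daily traffic, and helper names are hypothetical.

```python
import math
from statistics import NormalDist

def users_per_group(p_control: float, p_variant: float,
                    alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect p_control vs. p_variant."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

def days_to_run(n_per_group: int, daily_users_per_group: int) -> int:
    """Days needed to reach n_per_group, rounded up to whole weeks."""
    days = math.ceil(n_per_group / daily_users_per_group)
    return math.ceil(days / 7) * 7

# Hypothetical: 8% baseline conversion, hoping to detect a lift to 9.2%,
# with about 1,000 new users entering each group per day.
n = users_per_group(0.08, 0.092)
print(n, "users per group,", days_to_run(n, 1_000), "days")
```

Stopping as soon as a daily check looks promising, before reaching that sample size, inflates the chance of declaring a winner that is really noise.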

Power Your A/B Tests With Customer Data

Some of the world’s biggest companies run massive A/B tests built on historical, demographic, and behavioral data. Amazon uses A/B testing to determine the efficacy of its powerful recommendation engine. Netflix harnessed A/B testing to justify the creation of its Top 10 lists. Considering the success both Amazon and Netflix have had in their respective industries, it’s worth adding A/B testing to your product management tool belt. Check out our list of the top A/B testing tools you can use.

References

- “24 of the Most Surprising A/B Tests of All Time.” WordStream, July 8, 2021.

- “Using A/B testing to measure the efficacy of recommendations generated by Amazon Personalize.” AWS, August 20, 2020.

- “What is an A/B Test?” The Netflix Tech Blog, September 22, 2021.

Wil Pong

Former Head of Product, Experiment, Amplitude

Wil Pong is the former head of product for Amplitude Experiment. Previously, he was the director of product for the Box Developer Platform and product lead for the LinkedIn Talent Hub.
